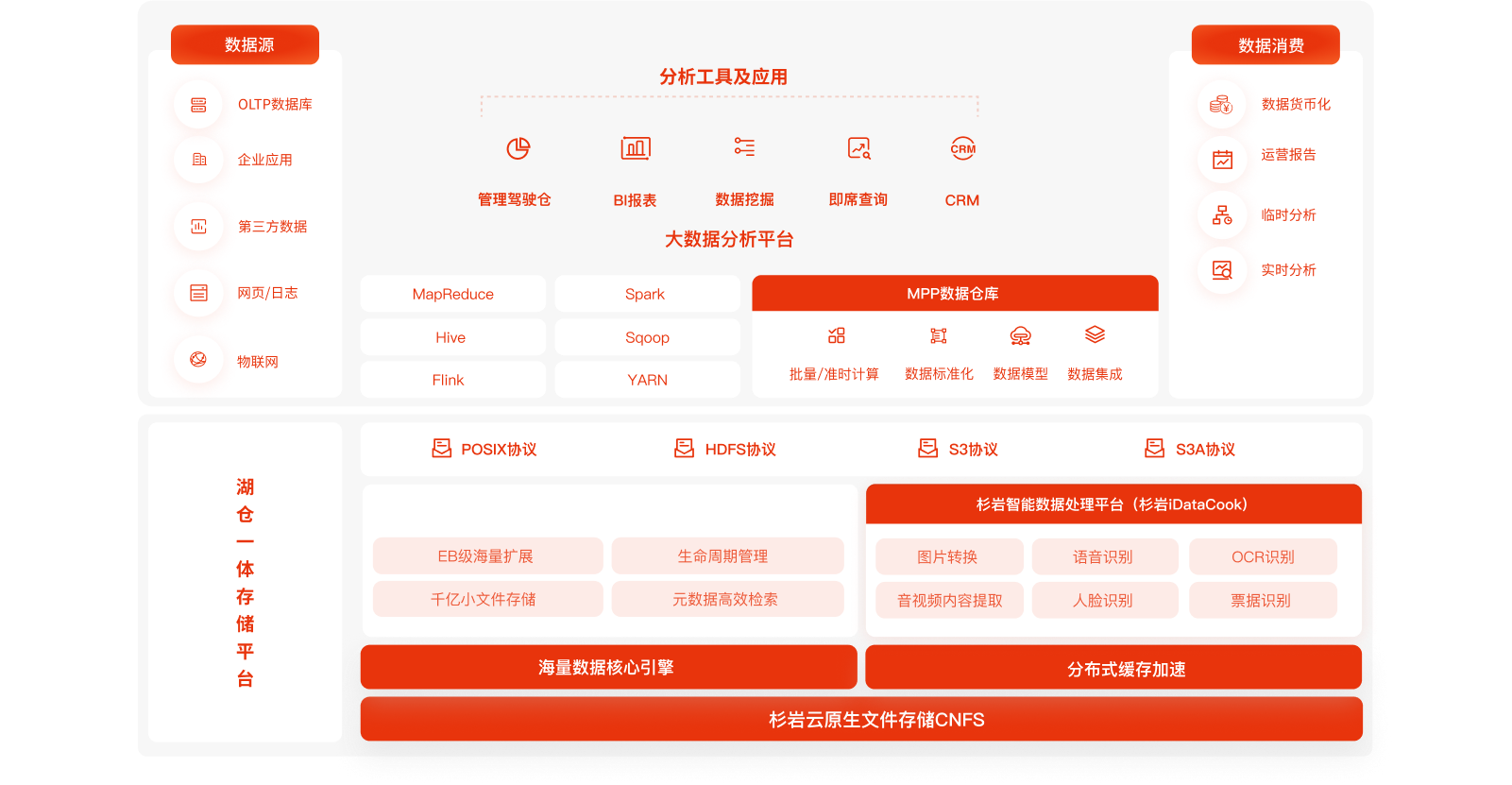

Address storage challenges across different storage scenarios and data formats

MOS

USP

CNFS

Provide efficient, intelligent, and reliable data management solutions

iDataFusion

iDataExplorer

CMS

Fast retrieval and invocation of business data, enabling data to deliver greater value

IDM

iDataCook

Industry Solutions

General Solutions